Guidelines for creating Snapshot copies of Infinite Volumes

- The volume must be online. You cannot create a Snapshot copy of an Infinite Volume if the Infinite Volume is in a Mixed state because a constituent is offline.

- The Snapshot copy schedule should not be more frequent than hourly. Creating a Snapshot copy of an Infinite Volume takes longer than creating one of a FlexVol volume. If you schedule Snapshot copies of Infinite Volumes more often than hourly, Data ONTAP tries to meet the schedule but might not succeed. A scheduled Snapshot copy is missed when the previous Snapshot copy is still being created.

- Time should be synchronized across all the nodes that the Infinite Volume spans. Synchronized time helps Snapshot copy schedules run smoothly and helps restoration of Snapshot copies function properly.

- The Snapshot copy creation job can run in the background. Creating a Snapshot copy of an Infinite Volume is a cluster-scoped job (unlike the same operation on a FlexVol volume). The operation spans multiple nodes in the cluster. You can force the job to run in the background by setting the -foreground parameter of the volume snapshot create command to false.

- After you create Snapshot copies of an Infinite Volume, you cannot rename the copy or modify the comment or SnapMirror label for the copy.
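The background option described above can be sketched as a small helper that assembles the command line. This is a minimal illustration, not NetApp tooling: the function name `build_snapshot_create` and the sample names `vs1`, `ivol`, and `nightly.0` are hypothetical, and the `-vserver`, `-volume`, and `-snapshot` parameters are assumed to match your Data ONTAP release.

```python
def build_snapshot_create(vserver, volume, snapshot, foreground=False):
    """Return the 'volume snapshot create' command line as a list of tokens.

    Hypothetical helper: it only builds the text of the command and does
    not contact a cluster.
    """
    for label, value in (("vserver", vserver), ("volume", volume), ("snapshot", snapshot)):
        # Reject empty names and names with spaces, which would break the command line.
        if not value or " " in value:
            raise ValueError(f"invalid {label} name: {value!r}")
    return [
        "volume", "snapshot", "create",
        "-vserver", vserver,
        "-volume", volume,
        "-snapshot", snapshot,
        # -foreground false asks the job to run in the background.
        "-foreground", "true" if foreground else "false",
    ]


if __name__ == "__main__":
    print(" ".join(build_snapshot_create("vs1", "ivol", "nightly.0")))
```

Returning a token list rather than a single string keeps the helper usable with tools that expect separate arguments; join the tokens with spaces to paste the command into a cluster shell.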

Guidelines for managing Snapshot copy disk consumption

- You cannot calculate the amount of disk space that can be reclaimed if Snapshot copies of an Infinite Volume are deleted.

- The size of a Snapshot copy for an Infinite Volume excludes the size of namespace mirror constituents.

- If you use the df command to monitor Snapshot copy disk consumption, it displays consumption for the individual data constituents in an Infinite Volume, not for the Infinite Volume as a whole.

- To reclaim disk space used by Snapshot copies of Infinite Volumes, you must manually delete the copies.

Create Snapshots

Syntax: snap create [-A | -V] <volume name> <snapshot name>

In the syntax above, ‘snap create’ is the command that creates a snapshot.

ARK> snap create -V vol0 testingsnap #### for volume level snapshot creation

ARK> snap create -A aggr0 testingsnap #### for aggregate level snapshot creation

‘-V’ is the option to create the snapshot at the volume level; ‘-A’ creates it at the aggregate level.

‘vol0’ is the volume name.

‘testingsnap’ is the snapshot name.
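To make the role of each argument concrete, the sketch below assembles the same `snap create` line from its parts. The function name `snap_create_command` and the `scope` keyword are hypothetical conveniences for illustration; only the `-V` and `-A` flags and the argument order come from the syntax above.

```python
# Map a readable scope name to the flag used in the syntax above.
SCOPE_FLAGS = {"volume": "-V", "aggregate": "-A"}


def snap_create_command(scope, container, snapshot):
    """Build a 'snap create' command string (hypothetical helper, nothing is executed)."""
    if scope not in SCOPE_FLAGS:
        raise ValueError(f"scope must be one of {sorted(SCOPE_FLAGS)}, got {scope!r}")
    return f"snap create {SCOPE_FLAGS[scope]} {container} {snapshot}"


if __name__ == "__main__":
    print(snap_create_command("volume", "vol0", "testingsnap"))
    print(snap_create_command("aggregate", "aggr0", "testingsnap"))
```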

Rename Snapshots

Syntax: snap rename [-A | -V] <volume Name> <Old-snap name> <New snap Name>

ARK> snap rename -V vol0 testingsnap realsnap #### Renaming Volume level snapshot

ARK> snap rename -A aggr0 testingsnap realsnap #### Renaming Aggregate level snapshot

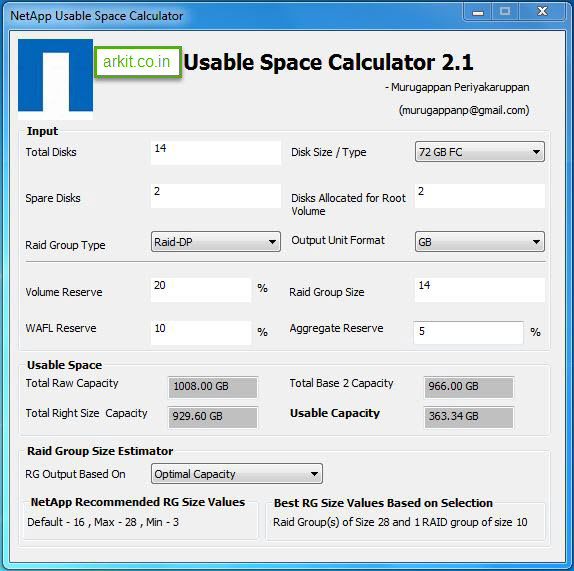

Snap reserve space

Syntax: snap reserve [-A | -V] <volume Name> <percentage>

ARK> snap reserve -V vol0 ### Display the current snap reserve percentage of vol0

ARK> snap reserve -V vol0 20 ### Change snap reserve space to 20%
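As a rough worked example of what a 20% reserve means, the sketch below splits a volume's size into the part set aside for snapshots and the part left for active data. It assumes the reserve is a percentage of the total volume size; the function name `split_reserve` and the 500 GB figure are hypothetical.

```python
def split_reserve(volume_size_gb, reserve_percent):
    """Return (snapshot_reserve_gb, active_space_gb) for a given reserve percentage."""
    if not 0 <= reserve_percent <= 100:
        raise ValueError("reserve_percent must be between 0 and 100")
    # Integer arithmetic keeps the example exact for whole-GB sizes.
    reserved = volume_size_gb * reserve_percent // 100
    return reserved, volume_size_gb - reserved


if __name__ == "__main__":
    reserved, active = split_reserve(500, 20)
    print(f"reserve: {reserved} GB, active file system: {active} GB")
```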

Snap Delete

Syntax: snap delete [-A | -V ] <volume Name> <snapshot name>

ARK> snap delete -V vol0 realsnap #### Deleting the realsnap snapshot of vol0

snap reclaimable size

Syntax: snap reclaimable <volume name> <snapshot Name>

ARK> snap reclaimable vol0 snapshot1

snap reclaimable: Approximately 780 Kbytes would be freed.
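If you collect this output in a script, the reported figure can be pulled out with a regular expression. The sketch below is matched only against the sample line shown above; the function name `parse_reclaimable_kb` is hypothetical, and other releases may word the message differently.

```python
import re

# Pattern based solely on the sample output line shown above.
_RECLAIMABLE = re.compile(r"Approximately\s+(\d+)\s+Kbytes would be freed")


def parse_reclaimable_kb(output):
    """Return the reclaimable size in Kbytes, or None if the line is not recognized."""
    match = _RECLAIMABLE.search(output)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    sample = "snap reclaimable: Approximately 780 Kbytes would be freed."
    print(parse_reclaimable_kb(sample))
```

Returning `None` for unrecognized text lets the caller decide how to handle unexpected output instead of failing inside the parser.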

Snap Restore

ARK> snap restore -V -t vol -s snapshot1 vol1

The above command restores the entire volume. Before restoring, it asks you for confirmation.

snap autodelete

snap autodelete is the option for deleting old snapshots automatically.

ARK> snap autodelete vol1 show

ARK> snap autodelete vol1 on

ARK> snap autodelete vol1 off